Along with other functions such as automated text reuse identification, the “Text Tools” plugin for ctext.org can use the ctext API to import textual data from ctext.org directly for analysis with regular expressions. A step-by-step online tutorial describes how to actually use the tool (see also the instructions on the tool’s own help page); here I will give some concrete examples of what the tool can be used to do.

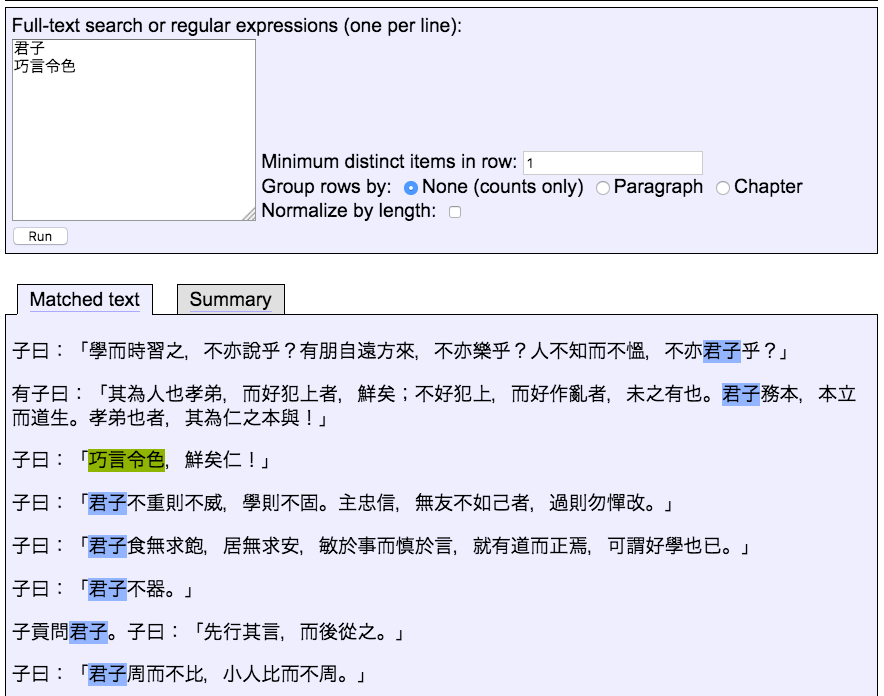

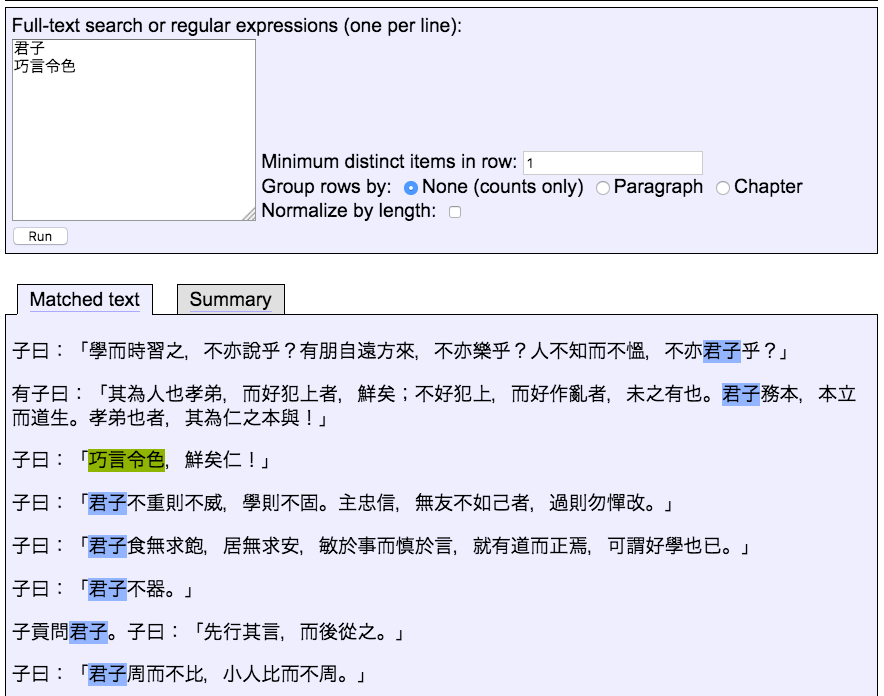

Regular expressions (often shortened to “regexes”) are a powerful extension of the simple string search widely available in computer software such as word processors and web browsers: a regular expression is a specification of something to be matched in some body of text. At their simplest, regular expressions are just strings of characters to search for, like “君子” or “巧言令色”. The most basic use of Text Tools is therefore to search for multiple terms within a text by entering the terms one per line in the “Regex” tab:

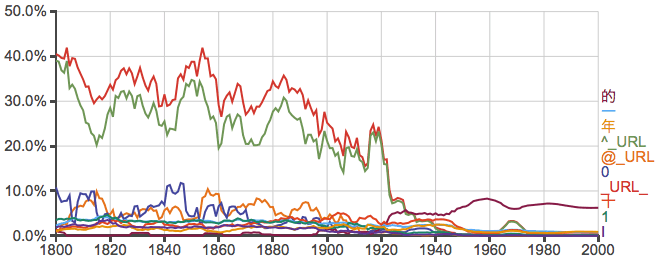

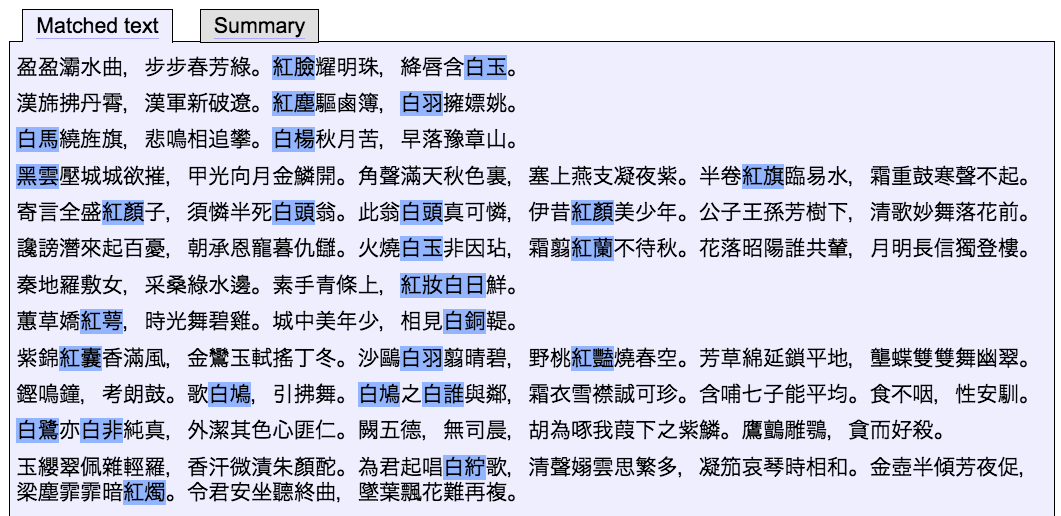

Text Tools will highlight each match in a different color, and show only the paragraphs with at least one match. Of course, you can specify as many search terms as you like, for example:

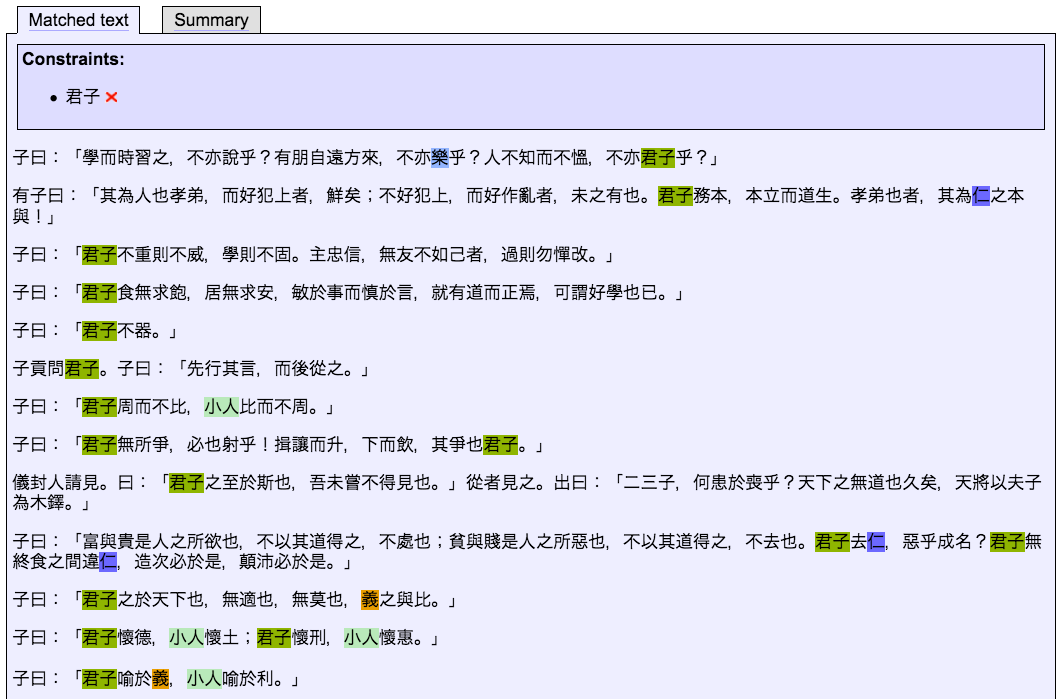

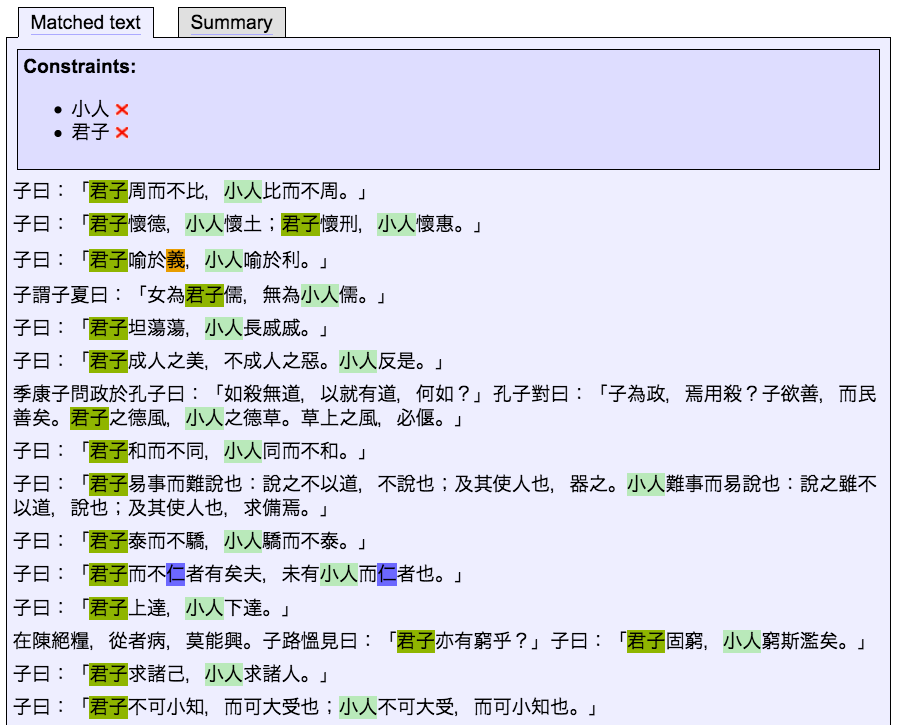

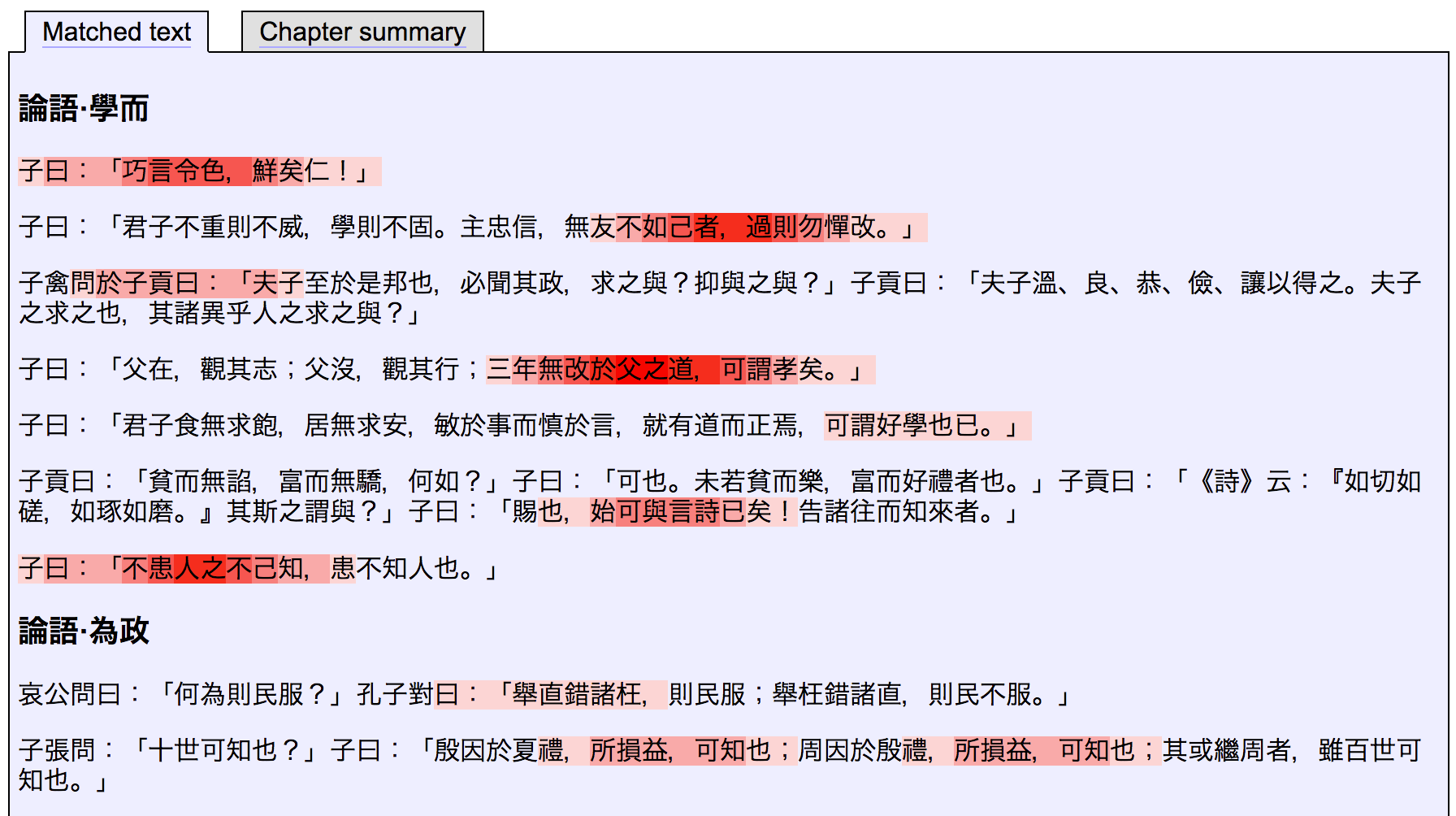

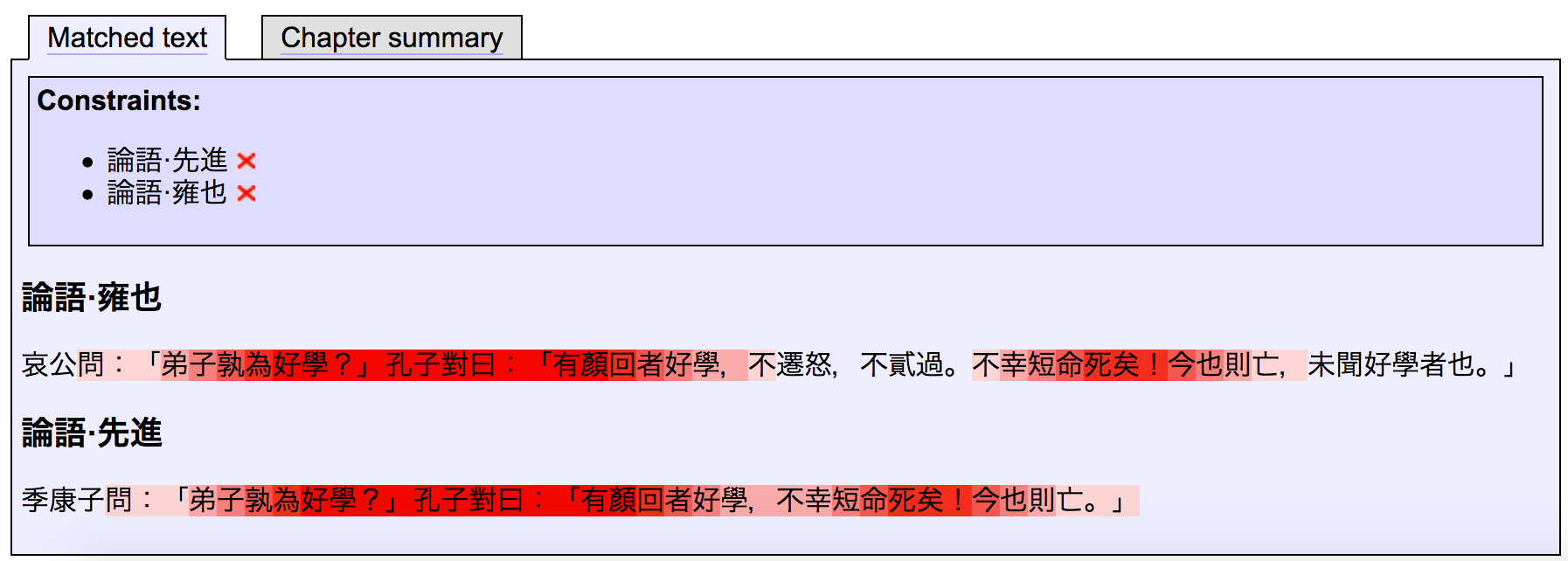

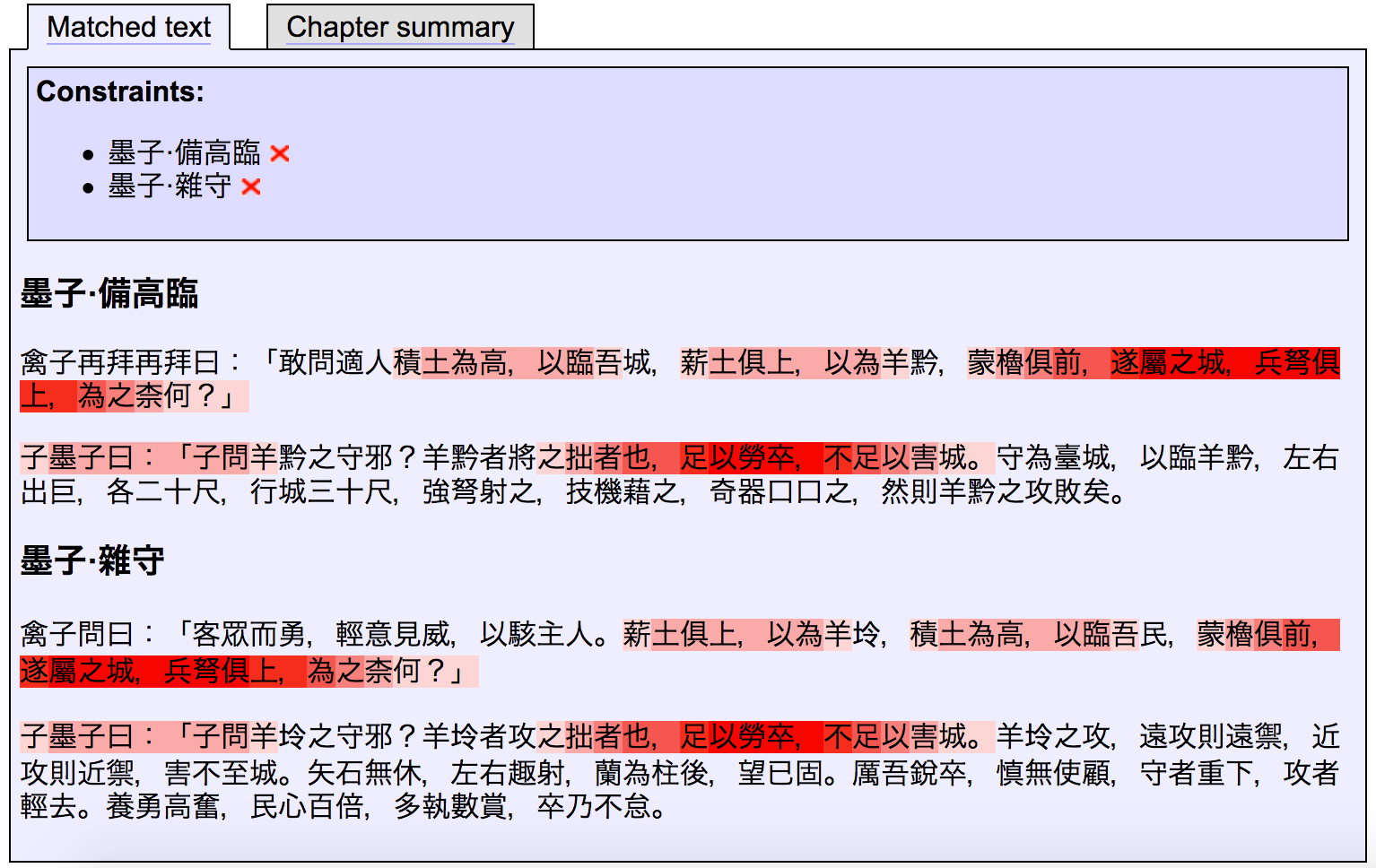

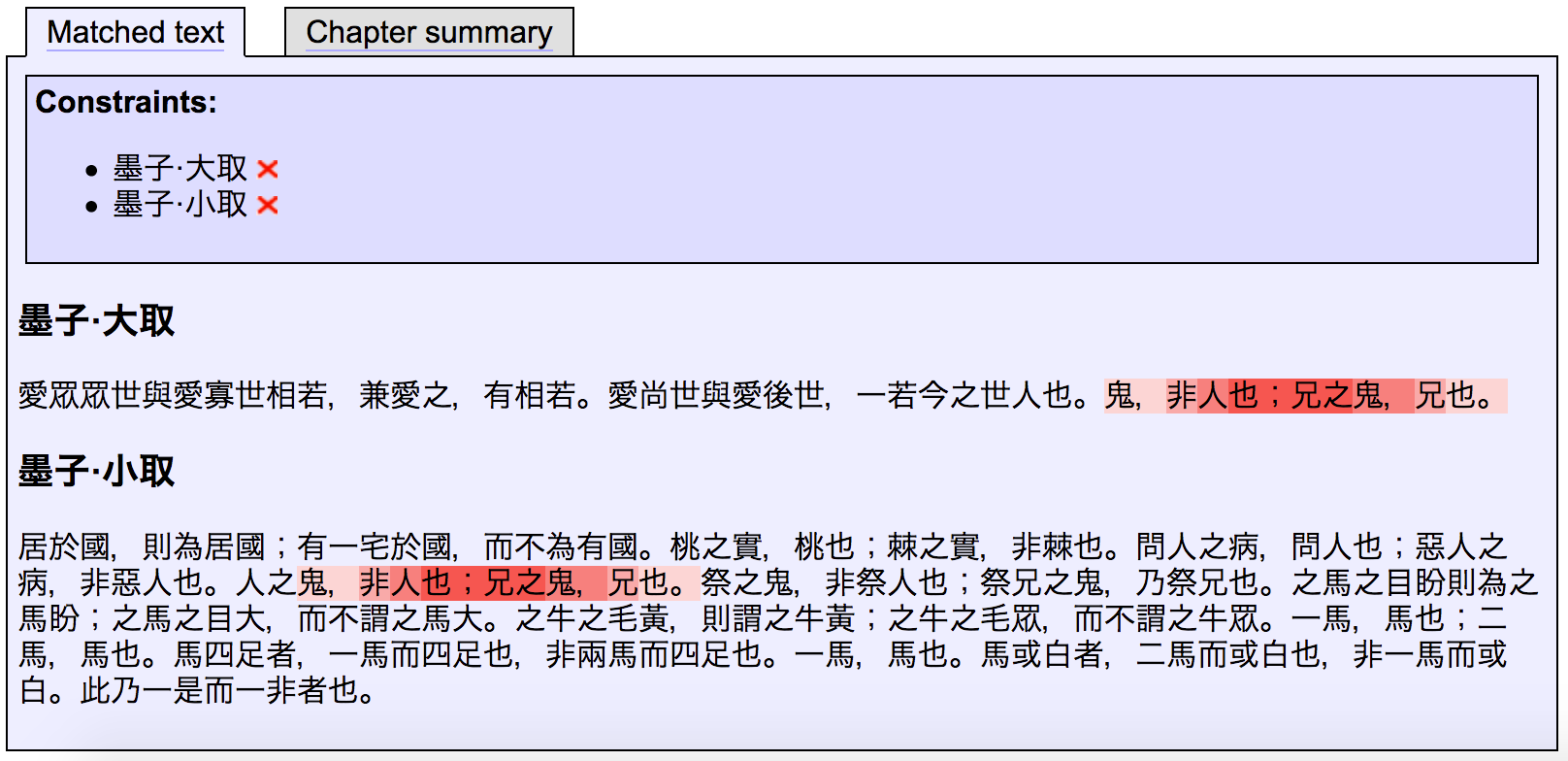

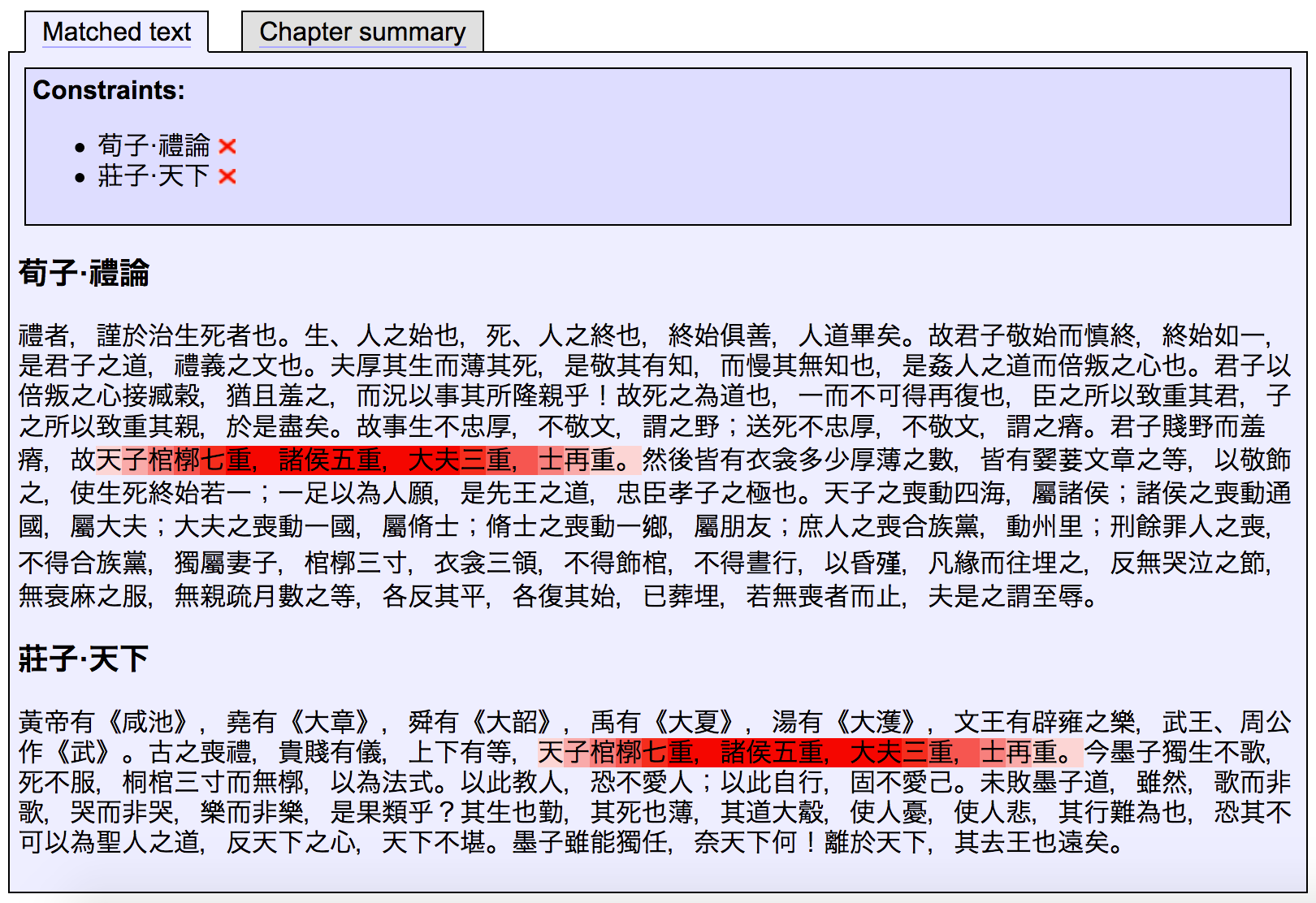

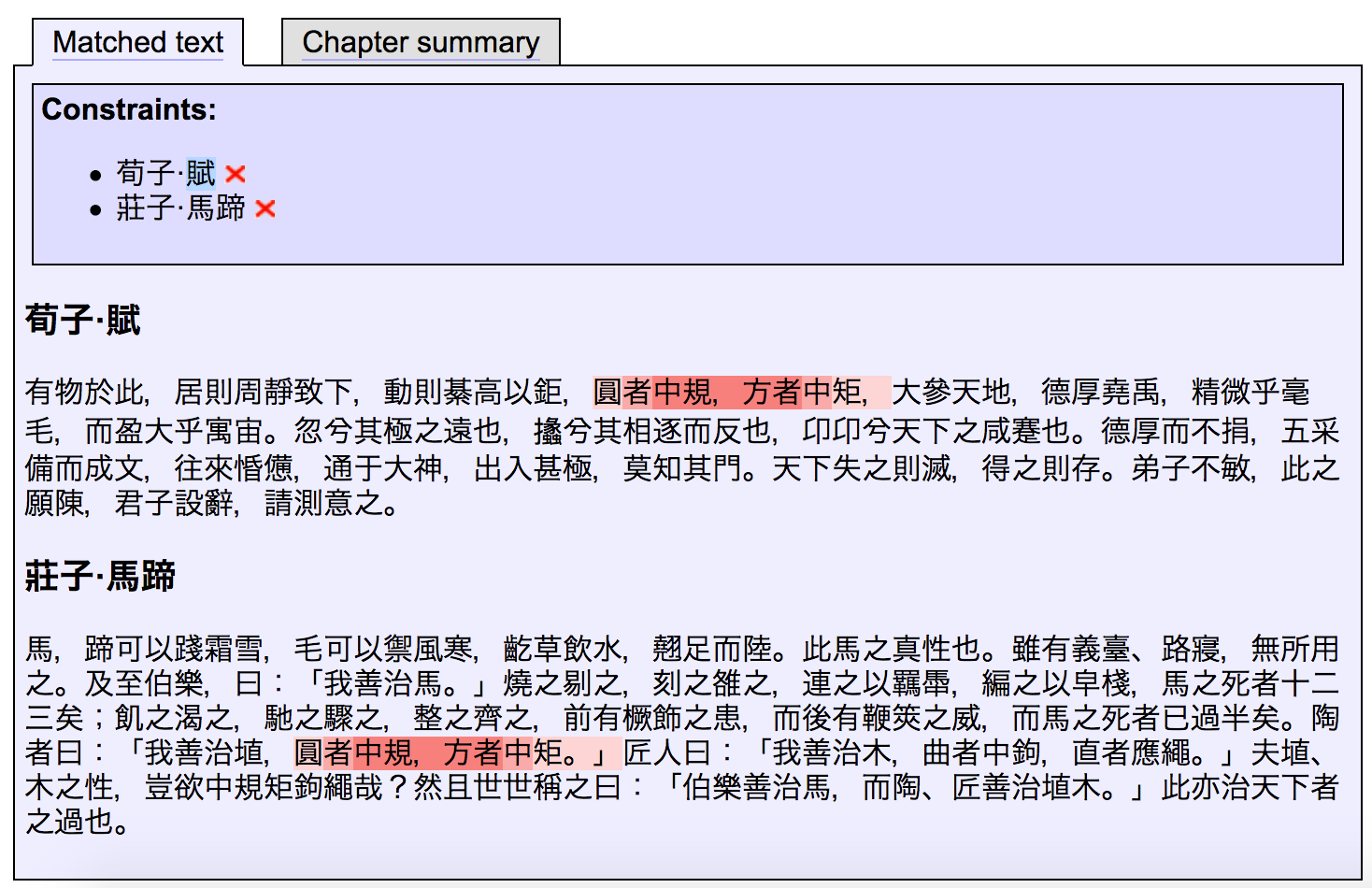

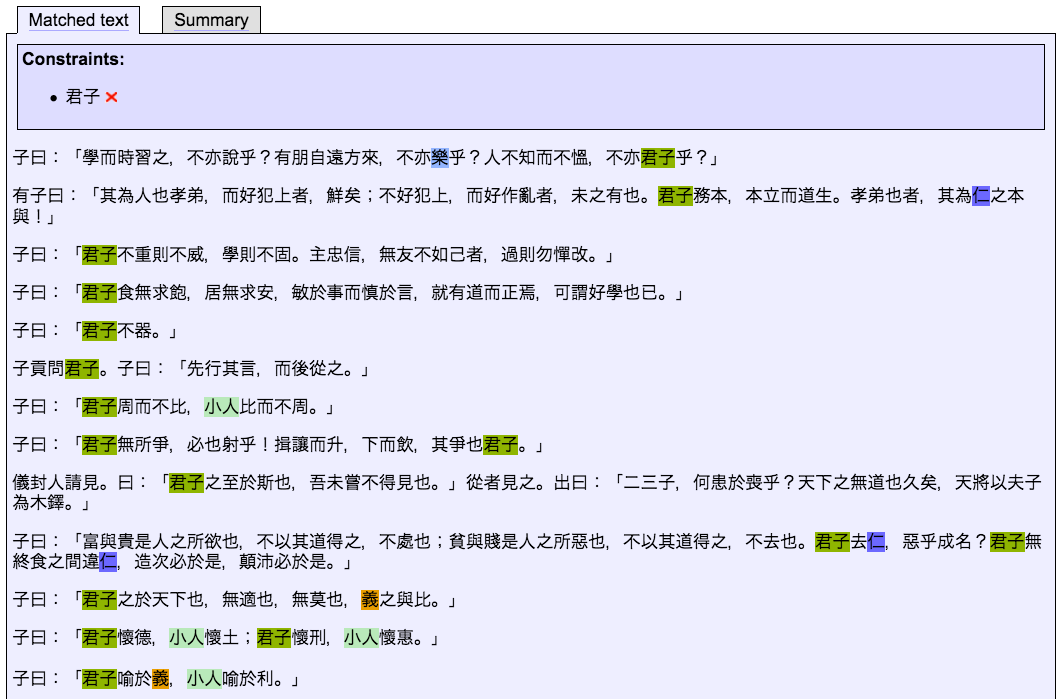
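For readers who want to see the same idea outside the tool, here is a minimal sketch in Python of a multi-term search that keeps only paragraphs with at least one match. It uses the standard `re` module rather than Text Tools itself; the sample paragraphs are typed in by hand for illustration (not fetched through the ctext API), and the function name `find_matches` is my own.

```python
import re

# Sample paragraphs, typed in by hand for illustration
# (an assumption: in the tool these would come from ctext.org).
paragraphs = [
    "子曰：「巧言令色，鮮矣仁！」",
    "子曰：「君子喻於義，小人喻於利。」",
    "子曰：「學而時習之，不亦說乎？」",
]

# One search term per line, as entered in the "Regex" tab.
terms = ["君子", "小人", "巧言令色"]


def find_matches(paragraph, terms):
    """Return (term, start offset) pairs for every match in one paragraph."""
    hits = []
    for term in terms:
        # Each term is treated as a regular expression; plain strings
        # without special characters simply match themselves.
        for m in re.finditer(term, paragraph):
            hits.append((term, m.start()))
    return hits


# Keep only the paragraphs that contain at least one match.
results = {}
for p in paragraphs:
    hits = find_matches(p, terms)
    if hits:
        results[p] = hits

for p, hits in results.items():
    print(p, hits)
```

The third paragraph is dropped because none of the terms occurs in it, which mirrors the way the tool hides paragraphs without matches.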

Clicking on any of the matched terms adds it as a “constraint”, meaning that only passages containing that term will be shown. For instance, clicking “君子” will show all the passages containing “君子”, while still highlighting any other matches present in those passages:

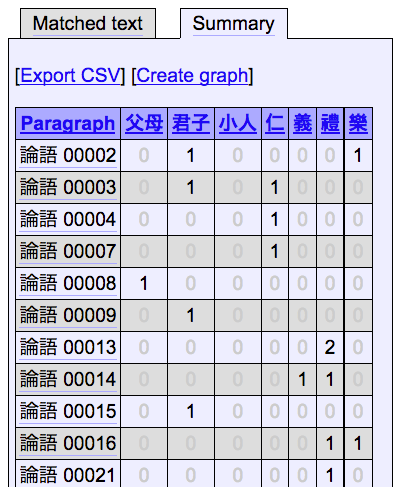

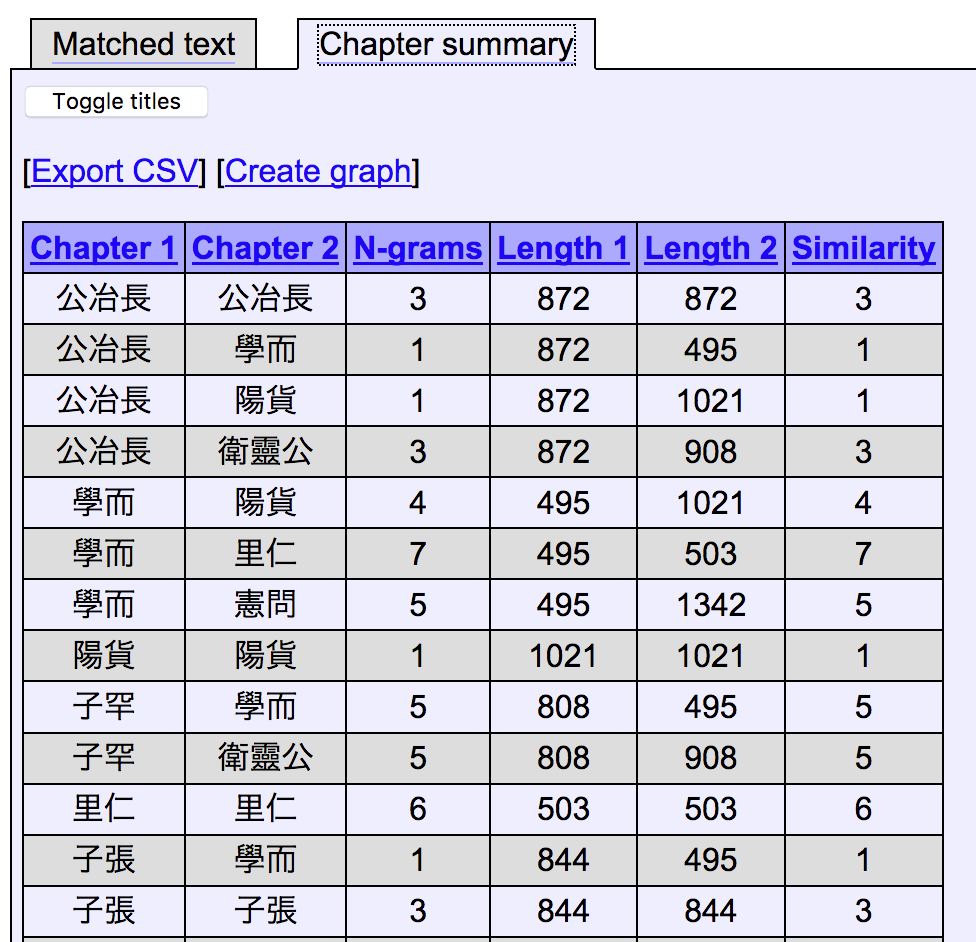

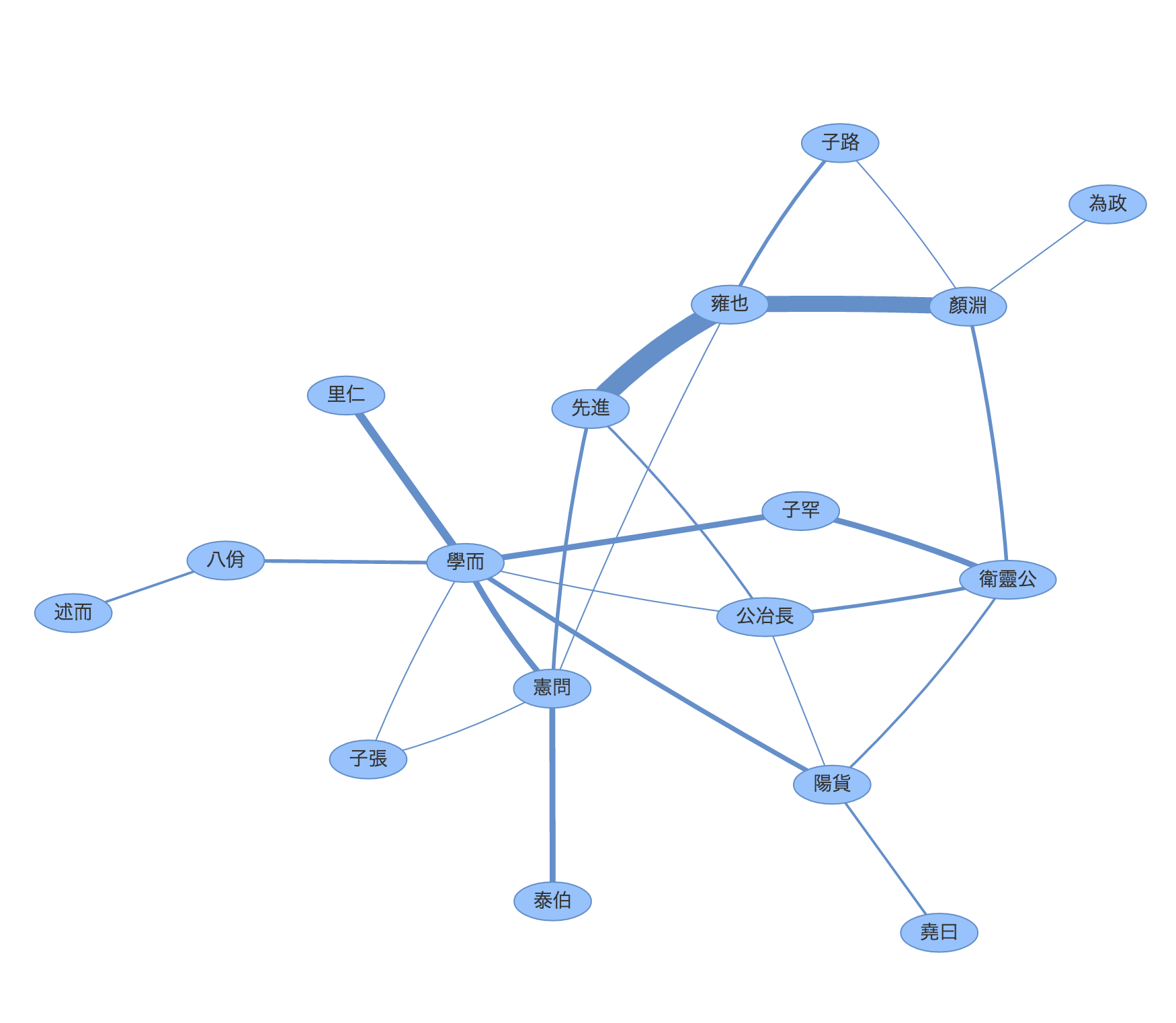

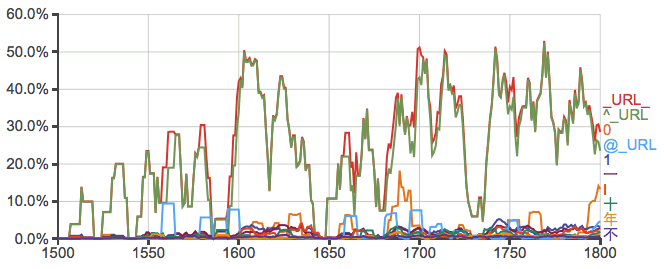

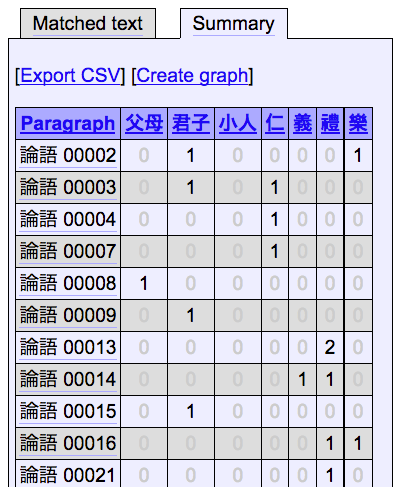
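The constraint behavior can be approximated in a few lines of Python. This is only a sketch of the logic as described above, not the plugin's actual code: the helper names `matched_terms` and `apply_constraints` are hypothetical, and the sample paragraphs are hand-typed.

```python
import re

# Hand-typed sample paragraphs (illustrative only).
paragraphs = [
    "子曰：「君子喻於義，小人喻於利。」",
    "子曰：「君子坦蕩蕩，小人長戚戚。」",
    "子曰：「巧言令色，鮮矣仁！」",
    "不學禮，無以立。",
]
terms = ["君子", "小人", "禮", "巧言令色"]


def matched_terms(paragraph, terms):
    """Return the set of terms that occur in the paragraph."""
    return {t for t in terms if re.search(t, paragraph)}


def apply_constraints(paragraphs, terms, constraints):
    """Keep paragraphs containing every constraint term.

    All matched terms are still reported for each kept paragraph,
    so other matches can be highlighted alongside the constraint.
    """
    required = set(constraints)
    kept = []
    for p in paragraphs:
        found = matched_terms(p, terms)
        if found and required <= found:
            kept.append((p, sorted(found)))
    return kept


# Equivalent of clicking "君子" in the results.
selected = apply_constraints(paragraphs, terms, ["君子"])
for p, found in selected:
    print(p, found)
```

Passing two terms as constraints, for example `["君子", "小人"]`, would keep only the paragraphs in which both occur.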

As with the similarity function of the same plugin, if your regular expression query results in relational data, this can be visualized as a network graph. This is done by setting “Group rows by” to either “Paragraph” or “Chapter”, which gives results in the “Summary” tab tabulated by paragraph (or chapter) – each row represents a paragraph which matched a term, and each column corresponds to one of the matched items:

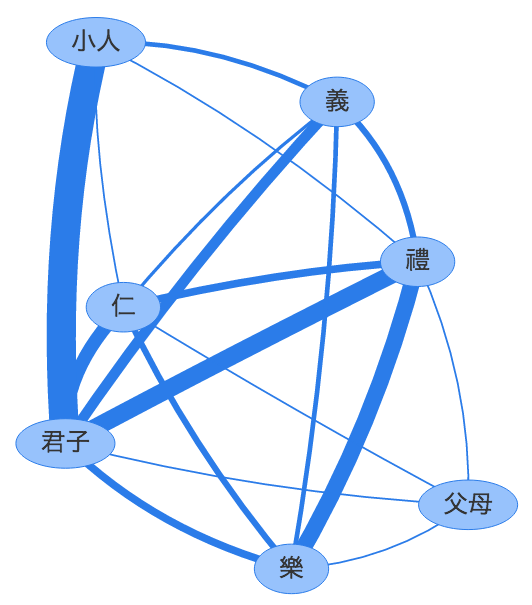

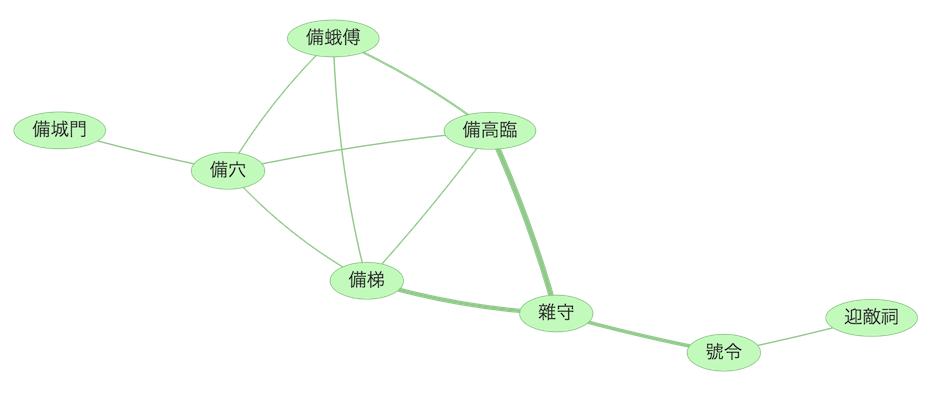

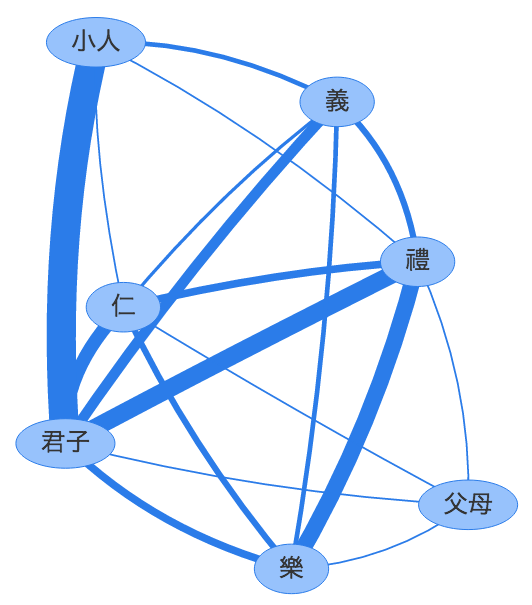

This can be visualized as a network graph in which edges represent co-occurrence of terms within the same paragraph, and edge weights represent the number of times such co-occurrence is repeated in the texts selected:

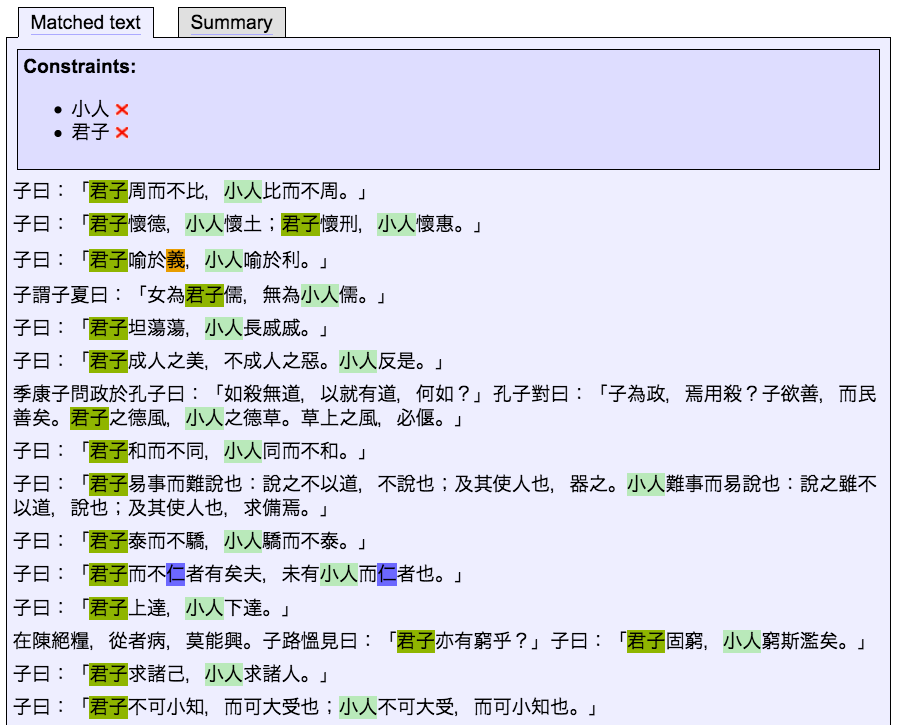
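To make the relationship between the table and the graph concrete, here is a small Python sketch that builds the per-paragraph table and then derives weighted edges from it. It assumes that an edge's weight is the number of paragraphs in which both terms occur, which is my reading of the description above rather than a statement about the plugin's internals; the sample paragraphs are hand-typed.

```python
import re
from collections import Counter
from itertools import combinations

# Hand-typed sample paragraphs (illustrative only).
paragraphs = [
    "子曰：「君子喻於義，小人喻於利。」",
    "子曰：「君子坦蕩蕩，小人長戚戚。」",
    "子曰：「君子博學於文，約之以禮，亦可以弗畔矣夫！」",
    "不學禮，無以立。",
]
terms = ["君子", "小人", "禮"]

# "Group rows by: Paragraph": one row per matching paragraph,
# one column per term, each cell holding the number of matches.
rows = []
for p in paragraphs:
    counts = {t: len(re.findall(t, p)) for t in terms}
    if any(counts.values()):
        rows.append(counts)

# Edges: each pair of terms present in the same row co-occurs once.
# Assumption: weight = number of paragraphs containing both terms.
edges = Counter()
for row in rows:
    present = sorted(t for t, n in row.items() if n > 0)
    for a, b in combinations(present, 2):
        edges[(a, b)] += 1

for (a, b), weight in edges.most_common():
    print(a, b, weight)
```

A paragraph that matches only one term, like the last one here, contributes a row to the table but no edge to the graph.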

This makes it clear which co-occurrences are repeated most frequently in the selected corpus: in this example, “君子” and “小人”, “君子” and “禮”, and so on. As with the similarity graphs created by the Text Tools plugin, double-clicking on any edge in the graph returns to the “Regex” tab with the two terms joined by that edge chosen as constraints. This lists all the passages in which those two terms co-occur, which is exactly the data behind the selected edge:

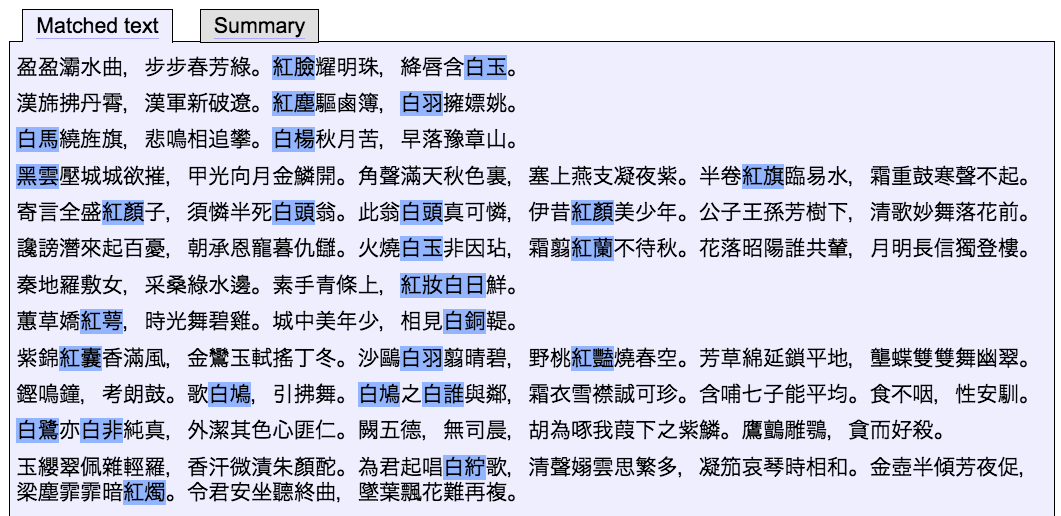

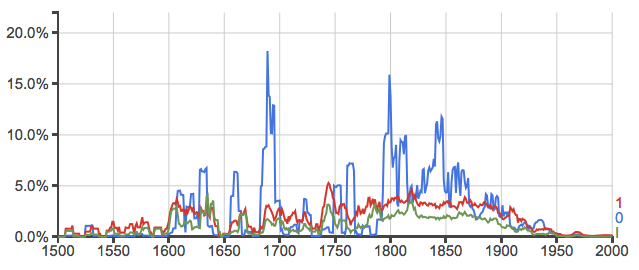

So far these examples have used fixed lists of search strings. But as the name suggests, the “Regex” tool also supports regular expressions, and so by making use of standard regular expression syntax, it’s possible to make far more sophisticated queries. [If you haven’t come across regular expressions before, some examples are covered in the regex section of the Text Tools tutorial.] For example, we could write a regular expression that matches any one of a specified set of color terms, followed by any other character, and see how these are used in the Quan Tang Shi (my example regex is “[黑白紅]\w”: match any one of “黑”, “白”, or “紅”, followed by one non-punctuation character):

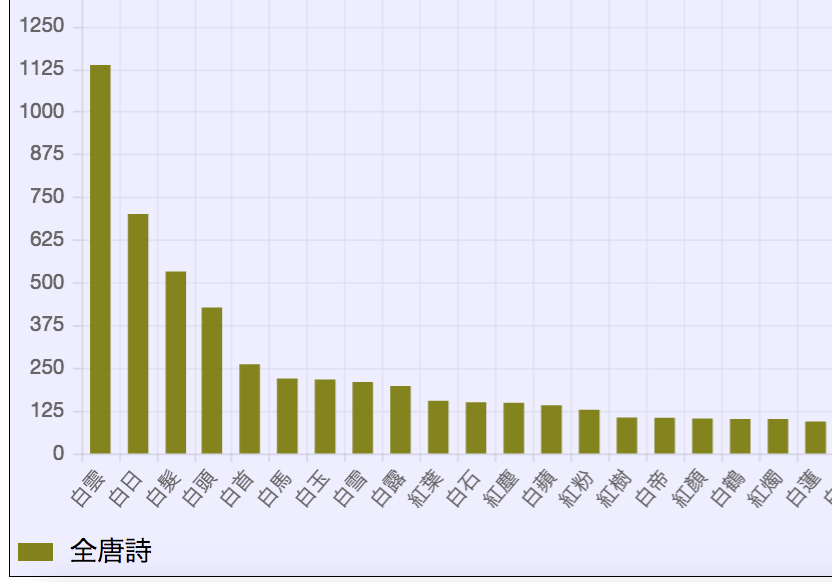

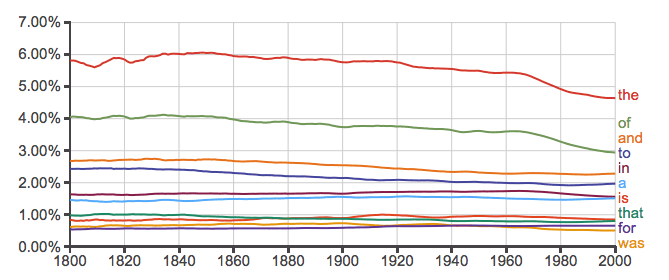

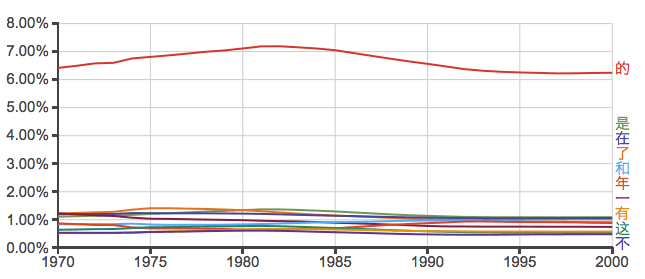

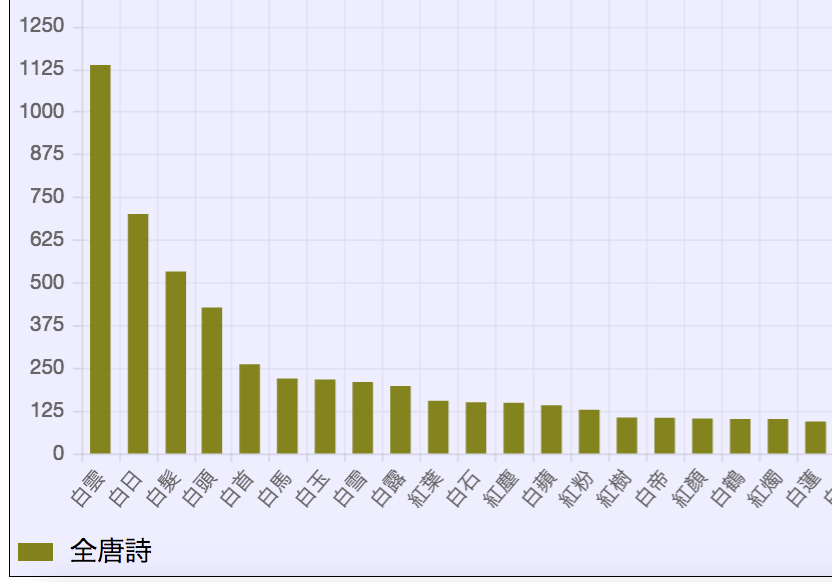
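The same pattern can be tried out in Python to get a feel for what it matches. This sketch uses Python's `re` module, where `\w` in a string pattern matches Unicode word characters (including Chinese characters) but not punctuation; the regex engine used by the tool may differ in its details. The sample lines are well-known Tang verses typed in by hand.

```python
import re

# Any one of the three color characters, followed by one word character.
pattern = re.compile(r"[黑白紅]\w")

# Hand-typed sample lines (illustrative only).
lines = [
    "白日依山盡，黃河入海流。",
    "遠上寒山石徑斜，白雲生處有人家。",
    "紅豆生南國，春來發幾枝。",
    "去年今日此門中，人面桃花相映紅。",
]

all_matches = []
for line in lines:
    found = pattern.findall(line)
    all_matches.extend(found)
    print(line, found)
```

The last line produces no match: “紅” is followed by “。”, which is punctuation and so is not matched by `\w`. “黃” is ignored throughout because it is not in the bracketed set.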

If we use “Group by: None”, we get total counts of each matched value – i.e. counts of how frequently “白雪”, “白水”, “紅葉”, and whatever other combinations there are occurred in our text. We can then use the “Chart” link to chart these results and get an overview of the most frequently used combinations:

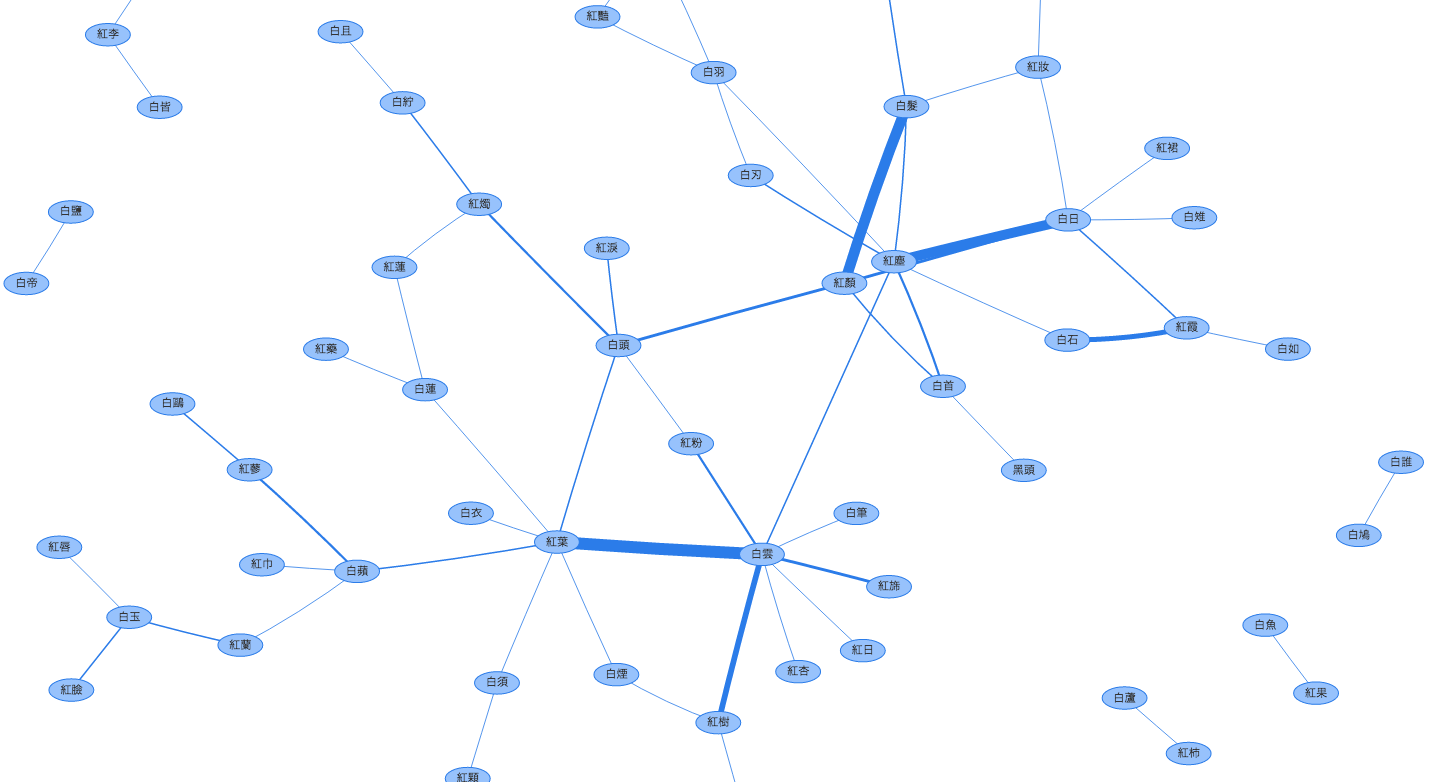

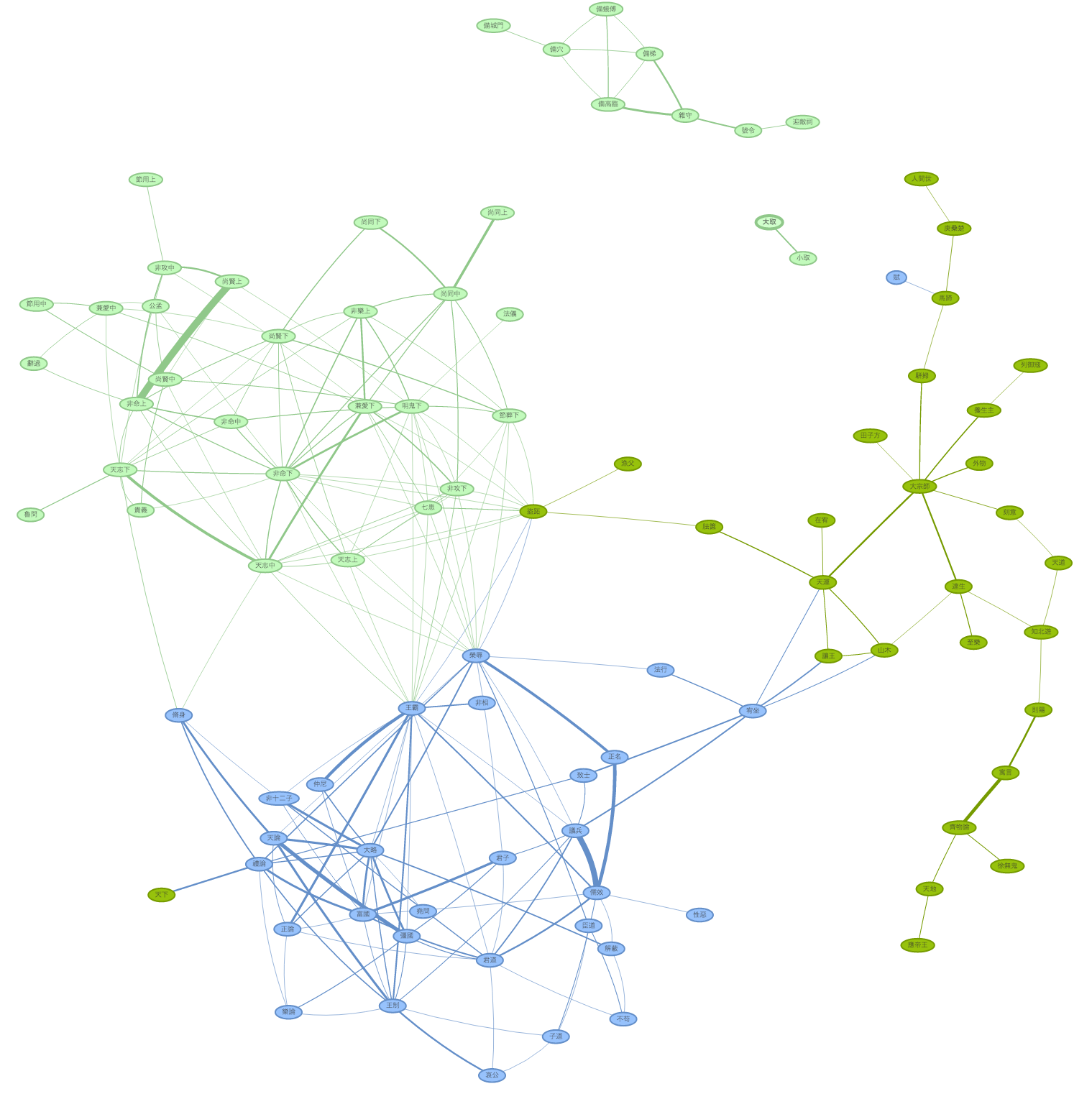

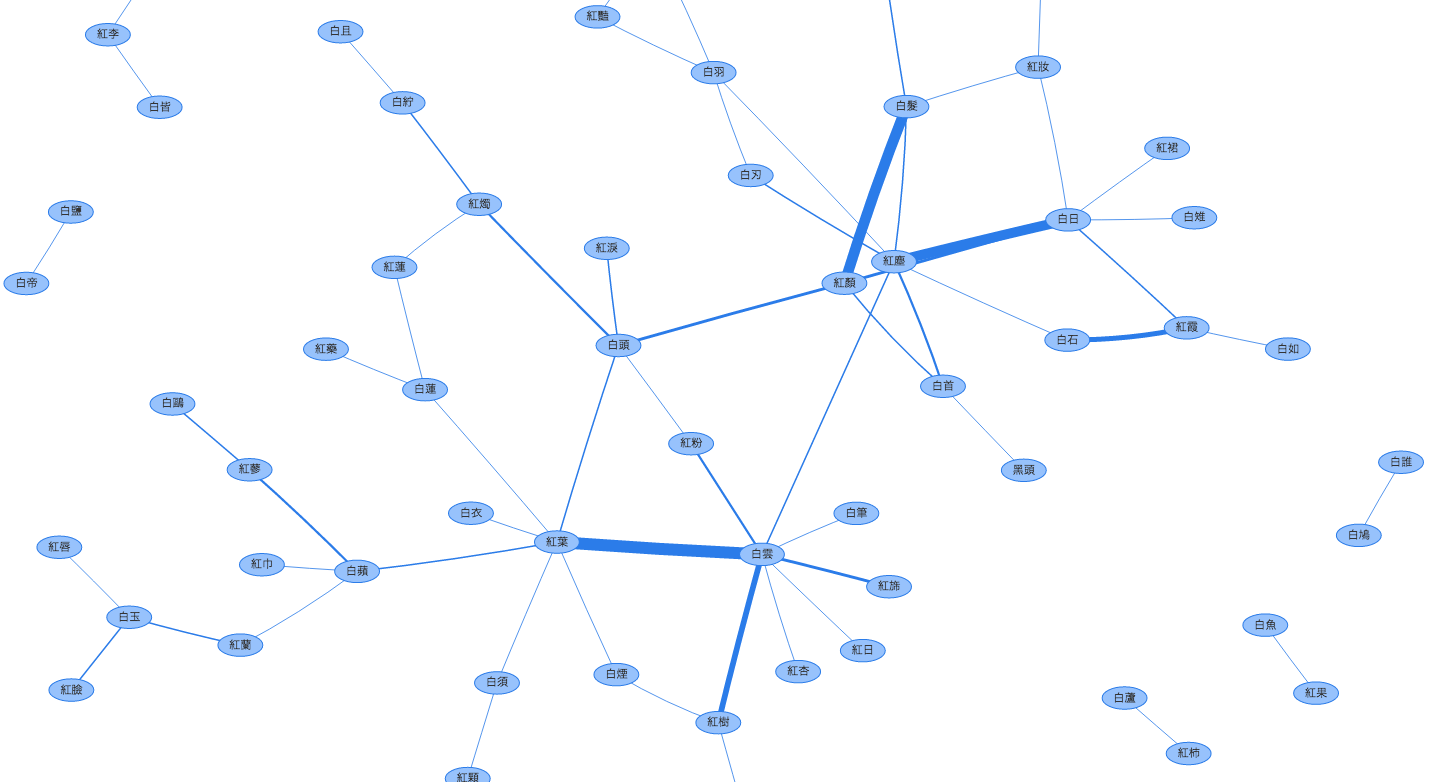
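Counting the matched values, as “Group by: None” does, amounts to a frequency tally over every match. A rough Python equivalent, using `collections.Counter` and a text-only bar chart in place of the “Chart” view, might look like this (hand-typed sample lines; not the plugin's own code):

```python
import re
from collections import Counter

pattern = re.compile(r"[黑白紅]\w")

# Hand-typed sample lines (illustrative only).
lines = [
    "遠上寒山石徑斜，白雲生處有人家。",
    "黃鶴一去不復返，白雲千載空悠悠。",
    "白髮三千丈，緣愁似箇長。",
    "停車坐愛楓林晚，霜葉紅於二月花。",
]

# Tally every matched two-character string across all lines.
counts = Counter()
for line in lines:
    counts.update(pattern.findall(line))

# Crude bar chart, most frequent first.
for term, n in counts.most_common():
    print(f"{term} {'#' * n} {n}")
```

Note that the pattern is purely positional, so it also picks up combinations such as “紅於” that are not color-plus-noun compounds; on a real corpus these tend to show up further down the frequency list.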

If we go back to the Regex tab and set “Group by” to “Paragraph”, we can visualize the relationships just as in the Analects example. The difference is that this time we don’t need to specify a list of terms: the terms are extracted using the pattern we specified as a regular expression. In this graph I have set “Skip edges with weight less than” to “2” to reduce clutter caused by pairs of terms that co-occur only once:

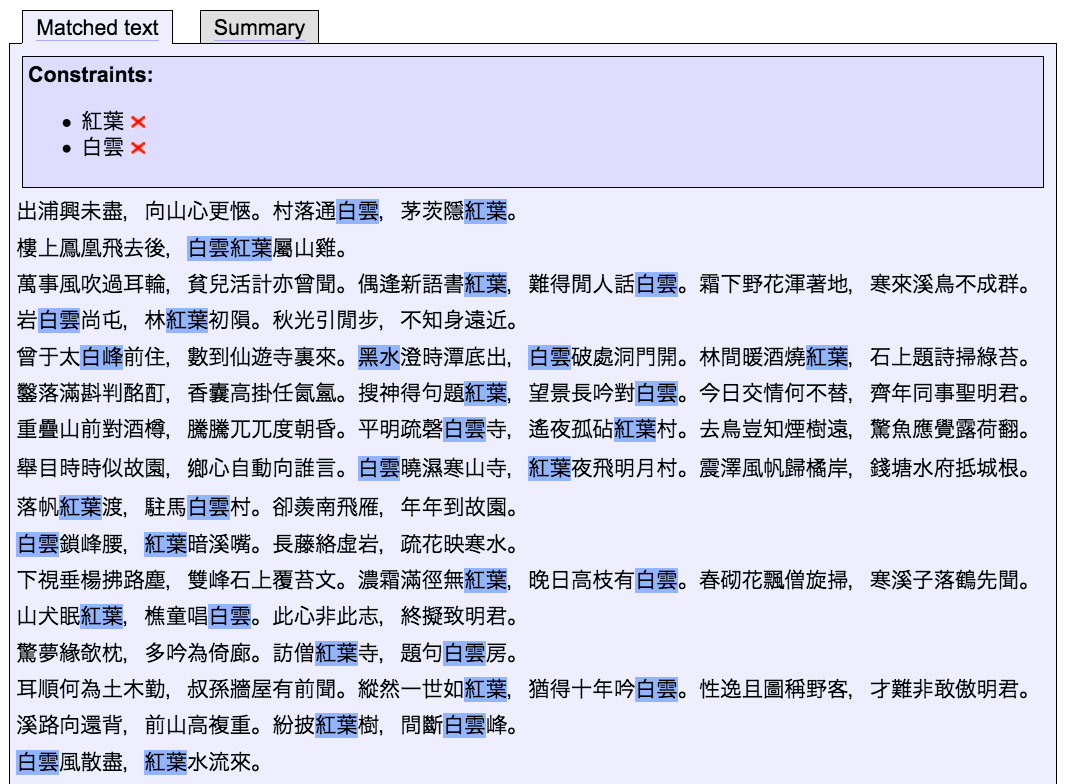

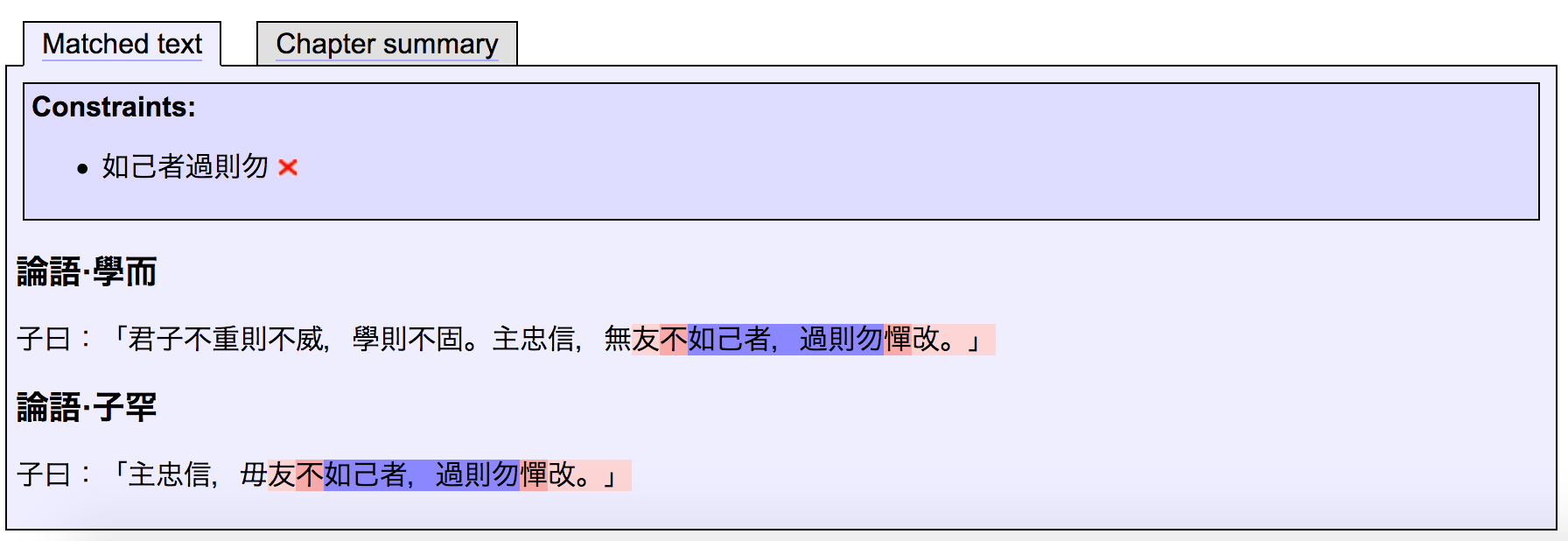

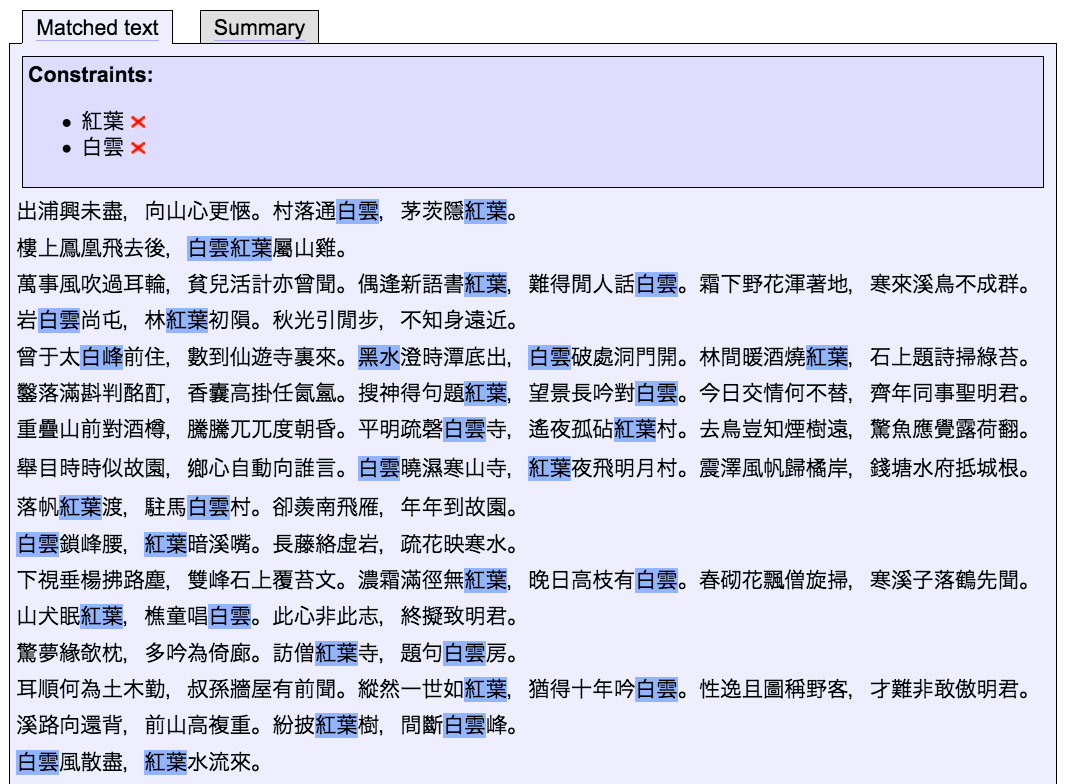
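As a sketch of what this grouping involves, the following Python extracts terms with the regular expression, pairs up the terms found in the same paragraph, and then drops edges below a minimum weight. The “paragraphs” here are made-up toy strings rather than quotations from the Quan Tang Shi, and `min_weight` is my own name for the “Skip edges with weight less than” setting.

```python
import re
from collections import Counter
from itertools import combinations

pattern = re.compile(r"[黑白紅]\w")

# Toy strings invented for illustration; NOT real verses.
paragraphs = [
    "白雲，紅葉。",
    "紅葉，白雲。",
    "白髮，紅顏。",
    "白雲，黑髮。",
]

# Terms are discovered by the pattern instead of listed in advance.
edges = Counter()
for p in paragraphs:
    present = sorted(set(pattern.findall(p)))
    for a, b in combinations(present, 2):
        edges[(a, b)] += 1

# Hypothetical equivalent of "Skip edges with weight less than 2".
min_weight = 2
kept = {pair: w for pair, w in edges.items() if w >= min_weight}

print(kept)
```

Only the pair that co-occurs in two separate paragraphs survives the threshold; the two pairs seen once are filtered out, which is what reduces clutter in the graph.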

Although combinations with “白” are the most common overall, as the bar chart above shows, the relational data in the graph immediately highlights another feature of these color pairings: the three most frequent pairings in our data are all pairings between a “白” term and a “紅” term, such as “白雲” and “紅葉”, or “白髮” and “紅顏”. As before, the edges are linked to the data, so we can easily go back to the text to see how these are actually being used.

Regular expressions are a hugely powerful way of expressing patterns to search for in text — see the tutorial for more examples and a step-by-step walk-through.